Underfitting

Tech Terms Daily – Underfitting

Category — A.I. (ARTIFICIAL INTELLIGENCE)

By the WebSmarter.com Tech Tips Talk TV editorial team

1 | Why Today’s Word Matters

Your data-science team ships a shiny churn-prediction model, yet marketing still sprays discounts to the wrong customers. Sales deploys a lead-score model that ranks junk enquiries above warm prospects. Sound familiar? Chances are the models are underfitting—too simplistic to capture the hidden patterns that separate buyers from browsers, fraud from legit, or healthy machines from soon-to-fail ones.

- A 2024 Kaggle survey showed that 42 % of failed AI pilots cited “model not accurate enough” as the root cause—often a polite way to say underfitting.

- McKinsey estimates each percentage-point bump in predictive accuracy lifts profit $2–4 million for mid-market enterprises.

Master underfitting, and you squeeze every drop of value from data. Ignore it, and you’ll waste cloud cycles serving models that are no smarter than guesswork—eroding stakeholder trust in AI initiatives.

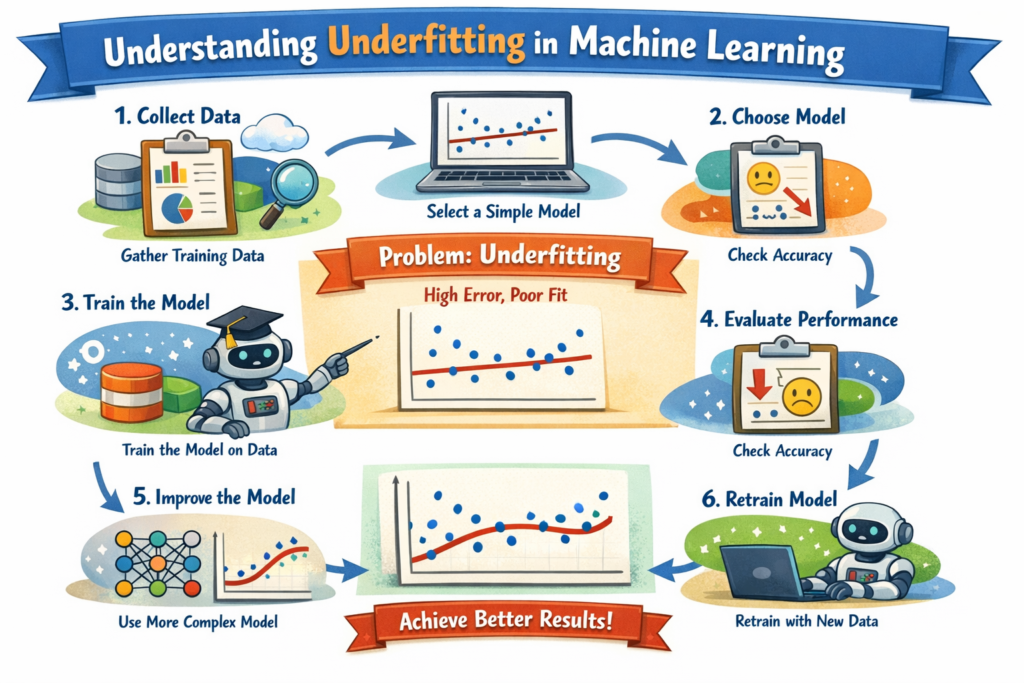

2 | Definition in 30 Seconds

Underfitting occurs when a machine-learning model is too simple to capture the underlying structure of the training data, leading to high bias and poor performance on both training and unseen (test) sets. Common causes:

- Over-aggressive regularisation

- Insufficient model capacity (few layers, low tree depth, small embedding)

- Missing or weak features

- Too few training epochs or poorly chosen hyper-parameters

Think of underfitting as using crayons to replicate a photograph—the broad strokes appear, but critical details vanish.
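To make the definition concrete, here is a minimal sketch using only the Python standard library. The synthetic data (a noisy parabola), the helper names `fit_line`, `mse`, and `make_data`, and the noise level are all assumptions chosen for illustration. A straight line fitted to the raw input scores badly on both the training and the test split, while the same learner given a squared feature scores well on both.

```python
import random

def fit_line(xs, ys):
    """Closed-form least squares for y ≈ w * x + b with a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w = sxy / sxx
    return w, mean_y - w * mean_x

def mse(xs, ys, w, b):
    """Mean squared error of the line (w, b) on the given points."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

rng = random.Random(0)  # fixed seed so the run is repeatable

def make_data(n):
    """Synthetic data (an assumption): y = x^2 plus Gaussian noise."""
    xs = [rng.uniform(-3, 3) for _ in range(n)]
    ys = [x * x + rng.gauss(0, 0.3) for x in xs]
    return xs, ys

train_x, train_y = make_data(200)
test_x, test_y = make_data(200)

# Too-simple model: a straight line on the raw input cannot bend.
w, b = fit_line(train_x, train_y)
underfit_train = mse(train_x, train_y, w, b)
underfit_test = mse(test_x, test_y, w, b)

# Same learner, richer feature: feed it x^2 instead of x.
sq_train = [x * x for x in train_x]
sq_test = [x * x for x in test_x]
w2, b2 = fit_line(sq_train, train_y)
better_train = mse(sq_train, train_y, w2, b2)
better_test = mse(sq_test, test_y, w2, b2)

print(f"line on x   : train MSE {underfit_train:.2f}, test MSE {underfit_test:.2f}")
print(f"line on x^2 : train MSE {better_train:.2f}, test MSE {better_test:.2f}")
```

The telling sign is that the first model's error is high on the training data too, so collecting more rows of the same features would not rescue it.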

3 | Spotting Underfitting in the AI Pipeline

| Stage | Symptom | Diagnostic Viz / Metric |
| --- | --- | --- |
| Training | Low training accuracy, high bias | Learning curve plateaus early |
| Validation/Test | Accuracy only marginally worse than training, yet both are poor | Confusion matrix with a weak diagonal (few correct predictions) |
| Production | Stakeholder feedback: “predictions feel random” | Drift dashboard stable, but KPI lagging |

Unlike overfitting (train good, test bad), underfitting stinks everywhere—your model simply never learned enough.
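The train-versus-validation comparison can be turned into a rough triage helper. The sketch below is only a heuristic: the function name `diagnose_fit`, the `target_score` you pass in, and the default 0.05 gap tolerance are illustrative assumptions, not standard thresholds.

```python
def diagnose_fit(train_score, val_score, target_score, gap_tolerance=0.05):
    """Rough triage from two accuracy-like scores (higher is better).

    target_score is the accuracy the business case needs (an assumption
    you supply); gap_tolerance is how far validation may trail training.
    """
    low_train = train_score < target_score
    large_gap = (train_score - val_score) > gap_tolerance

    if low_train and not large_gap:
        return "underfitting"            # poor everywhere, small gap
    if low_train and large_gap:
        return "high bias and high variance"
    if large_gap:
        return "overfitting"             # train good, validation bad
    return "acceptable"

print(diagnose_fit(0.62, 0.60, target_score=0.85))
print(diagnose_fit(0.99, 0.70, target_score=0.85))
print(diagnose_fit(0.90, 0.88, target_score=0.85))
```

Treat the label as a prompt for a closer look at learning curves rather than a verdict.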

4 | Key Metrics That Matter

| Metric | Why It Matters | Underfitting Red Flag |
| --- | --- | --- |
| Training vs. Bayes Error | Theoretical best vs. actual | Gap > 5 % |
| Learning-Curve Slope | Information gain per epoch | Flat after early steps |
| Bias-Variance Decomp. | Pinpoints high bias | High bias, low variance |
| Feature Importances | Captures signal strength | Near-uniform weights |
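Two of the red flags in the table are easy to check numerically. The following sketch assumes you already have a list of per-epoch losses and a list of non-negative feature importances; the helper names, the window of 5 epochs, and the 1 % improvement threshold are illustrative choices, not established cut-offs.

```python
import math

def is_flat(losses, window=5, min_rel_improvement=0.01):
    """True if loss improved by less than min_rel_improvement
    (relative) over the last `window` epochs."""
    if len(losses) <= window:
        raise ValueError("need more than `window` loss values")
    start, end = losses[-window - 1], losses[-1]
    if start == 0:
        return True  # loss is already zero, nothing left to gain
    return (start - end) / abs(start) < min_rel_improvement

def importance_uniformity(importances):
    """Normalised Shannon entropy of non-negative importances.

    Returns a value in [0, 1]; 1.0 means every feature carries the same
    weight, which the table above lists as an underfitting red flag.
    """
    total = sum(importances)
    if len(importances) < 2 or total <= 0:
        raise ValueError("need at least two features with positive total weight")
    probs = [v / total for v in importances if v > 0]
    entropy = -sum(p * math.log(p) for p in probs)
    return entropy / math.log(len(importances))

stalled = [0.70, 0.69, 0.69, 0.69, 0.69, 0.69, 0.69]
learning = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3, 0.2]
print(is_flat(stalled), is_flat(learning))

print(round(importance_uniformity([0.25, 0.25, 0.25, 0.25]), 3))
print(round(importance_uniformity([0.97, 0.01, 0.01, 0.01]), 3))
```

A flat curve only signals underfitting when the loss is also still high; a flat curve at a low loss simply means training has converged.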

5 | Five-Step Blueprint to Cure Underfitting

1. Increase Model Capacity—But Smartly

- Tabular: Increase tree depth and add more estimators (e.g., raising XGBoost n_estimators from 300 to 800).

- DL: Add layers or widen hidden units, switch from shallow MLP to CNN/Transformer if structure warrants.

2. Engineer Stronger Features

- Aggregate time-series stats, ratio features, domain-specific encodings.

- Use embeddings for high-cardinality categorical variables.

3. Reduce Over-Regularisation

- Lower L1/L2 penalties and dropout rates, and lengthen early-stopping patience so training is not cut short.

- Monitor validation loss to avoid swinging into overfitting.

4. Train Longer & Better

- Increase epochs with cyclic or warm-restart learning-rate schedules.

- Use techniques like batch-norm and residual connections to stabilise deeper nets.

5. Ensemble Wisely

- Blend models (stacking, bagging) to capture complementary patterns without manual feature tinkering.
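Steps 3 and 4 can be illustrated with a toy experiment. The sketch below is an assumption-laden example, not a production recipe: it trains a one-feature linear model by batch gradient descent on synthetic data, and the names `train_linear`, `l2`, `epochs`, and `lr` are invented for this illustration. The same model underfits when the L2 penalty is too strong or when training stops after a handful of epochs.

```python
import random

def train_linear(xs, ys, l2=0.0, epochs=500, lr=0.05):
    """Batch gradient descent for y ≈ w * x + b.

    Minimises mean squared error plus an L2 penalty l2 * w**2 on the weight.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n + 2 * l2 * w
        grad_b = 2 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(xs, ys, w, b):
    """Mean squared error of the line (w, b) on the given points."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Synthetic data (an assumption): y = 3x + 1 plus a little noise.
rng = random.Random(0)
xs = [rng.uniform(-1, 1) for _ in range(200)]
ys = [3 * x + 1 + rng.gauss(0, 0.1) for x in xs]

baseline_mse = mse(xs, ys, *train_linear(xs, ys))                 # sensible settings
over_reg_mse = mse(xs, ys, *train_linear(xs, ys, l2=5.0))         # penalty too strong
under_trained_mse = mse(xs, ys, *train_linear(xs, ys, epochs=3))  # stopped too early

print(f"baseline        : {baseline_mse:.3f}")
print(f"over-regularised: {over_reg_mse:.3f}")
print(f"under-trained   : {under_trained_mse:.3f}")
```

All three errors are measured on the training data itself, which is the point: an underfit model cannot even reproduce the examples it was shown.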

6 | Common Pitfalls (and Fast Fixes)

| Pitfall | Impact | Fix |
| --- | --- | --- |
| “Simplicity First” dogma | High bias, low ROI | Balance simplicity with accuracy via cross-validation |
| Aggressive early stopping | Model halts before learning | Use validation-loss smoothing or larger patience |
| Blind hyper-parameter search | Wasted compute, still bad model | Bayesian optimisation (Optuna) to explore capacity |
| Dropping informative features for “parsimony” | Signal loss | Run SHAP to verify real importance before pruning |
| Ignoring class imbalance | Model never learns minority | Use SMOTE, class weights, focal loss |
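For the class-imbalance row, class weights are usually the cheapest fix to try first. The sketch below computes inverse-frequency weights with the common "balanced" heuristic, n_samples / (n_classes × class count); the function name `balanced_class_weights` and the 95/5 churn split are illustrative assumptions.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

# Hypothetical churn data set: 95 customers stay, 5 churn.
labels = ["stay"] * 95 + ["churn"] * 5
weights = balanced_class_weights(labels)

for cls, weight in weights.items():
    print(f"{cls}: {weight:.3f}")
```

Passing weights like these to a loss function makes each minority-class mistake count for more, so the model can no longer score well by predicting the majority class every time.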

7 | Five Advanced Tactics for 2025

- Neural Architecture Search (NAS) – Auto-discovers a suitable depth and width, reducing underfitting without guesswork.

- Self-Supervised Pre-training – Leverages unlabelled data to learn rich representations before supervised fine-tuning.

- Rich Feature Stores – Centralise engineered features so new models start with proven signal, minimising bias.

- Dynamic Capacity Scaling – Auto-expands layers during training when validation loss stagnates.

- Causal Feature Selection – Identifies causal ("why") relationships rather than spurious correlations; avoids both under- and overfitting in shifting domains.
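The idea behind automated capacity search can be shown with a deliberately tiny stand-in. The sketch below is a loose analogue, not NAS or a real dynamic-capacity method: it uses a piecewise-constant regressor whose only capacity knob is the number of bins, doubles that number each round, and stops once validation loss stops improving. All names (`fit_bins`, `grow_capacity`, `min_gain`) and the synthetic data are assumptions for illustration.

```python
import random

def fit_bins(xs, ys, n_bins):
    """Piecewise-constant regressor on [0, 1): one mean per equal-width bin."""
    sums = [0.0] * n_bins
    counts = [0] * n_bins
    for x, y in zip(xs, ys):
        i = min(int(x * n_bins), n_bins - 1)
        sums[i] += y
        counts[i] += 1
    overall = sum(ys) / len(ys)
    # Bins with no training points fall back to the overall mean.
    return [s / c if c else overall for s, c in zip(sums, counts)]

def mse(means, xs, ys):
    """Validation or training error of a fitted bin model."""
    n_bins = len(means)
    preds = (means[min(int(x * n_bins), n_bins - 1)] for x in xs)
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(xs)

def grow_capacity(train, val, max_bins=64, min_gain=0.02):
    """Double the bin count until validation loss improves by less than min_gain."""
    n_bins = 1
    best_bins, best_loss = n_bins, mse(fit_bins(*train, n_bins), *val)
    while n_bins * 2 <= max_bins:
        n_bins *= 2
        loss = mse(fit_bins(*train, n_bins), *val)
        if best_loss - loss < min_gain:  # validation loss has stagnated
            break
        best_bins, best_loss = n_bins, loss
    return best_bins, best_loss

# Synthetic data (an assumption): y = 3x^2 plus noise, x in [0, 1).
rng = random.Random(0)

def make_data(n):
    xs = [rng.random() for _ in range(n)]
    ys = [3 * x * x + rng.gauss(0, 0.1) for x in xs]
    return xs, ys

train, val = make_data(400), make_data(400)
one_bin_loss = mse(fit_bins(*train, 1), *val)   # the most underfit model
best_bins, best_loss = grow_capacity(train, val)
print(f"1 bin: val MSE {one_bin_loss:.3f}; chosen {best_bins} bins: val MSE {best_loss:.3f}")
```

Stopping on validation loss rather than training loss is what keeps the search from swinging past "enough capacity" into overfitting.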

8 | Recommended Tool Stack

| Need | Tool | Highlight |
| --- | --- | --- |
| Learning Curves | TensorBoard, Weights & Biases | Real-time bias/variance plots |
| Hyper-Parameter Search | Optuna, Ray Tune | Bayesian + early-stopping pruning |
| Feature Engineering | Featuretools, dbt + SQL morph | Automated aggregations, lineage |
| Explainability | SHAP, ELI5 | Check uniform importances (sign of underfit) |
| AutoML / NAS | Google Vertex AI, Auto-Keras | Capacity search with constraints |

9 | How WebSmarter.com Turns Underfit Into Profit

- Model Audit Sprint – 48-hour deep dive into learning curves, bias/variance, feature gaps—pinpoints underfit root cause.

- Capacity & Feature Workshop – Data scientists co-create richer feature sets and architect right-sized models; typical lift +12 ppts accuracy.

- Auto-Tune Pipeline – We integrate Optuna + Weights & Biases sweeps; hyper-parameter tuning time drops 40 %.

- Live Bias Dashboard – SHAP & drift tiles notify teams when new data pushes model toward underfit; proactive retrain triggers.

- Enablement & Docs – Templates and playbooks keep future models from repeating underfitting sins.

Clients usually see a 30 % reduction in prediction error and a +22 % revenue impact within one quarter.

10 | Wrap-Up: From Bland Models to Business Value

Underfitting quietly drains AI budgets and credibility. By recognising the signs—flat learning curves, uniform feature weights—and applying targeted remedies, teams unlock the full predictive power hidden in their data. Partner with WebSmarter’s audit-to-optimise framework, and your next model won’t just fit—it will forecast, personalise, and convert.

Ready to transform underperforming models into revenue engines?

🚀 Book a 20-minute discovery call and WebSmarter’s AI specialists will diagnose, upgrade, and future-proof your modeling pipeline—before another quarter of underfitting stands between you and your KPIs.

Join us tomorrow on Tech Terms Daily as we decode another AI buzzword into a step-by-step growth playbook—one term, one measurable win at a time.
